The Future of User Interfaces

22 min. read

William Craig

CEO & Co-Founder

President of WebFX. Bill has over 25 years of experience in the Internet marketing industry specializing in SEO, UX, information architecture, marketing automation and more. William’s background in scientific computing and education from Shippensburg and MIT provided the foundation for MarketingCloudFX and other key research and development projects at WebFX.

User interfaces, the ways we interact with our technologies, have evolved a lot over the years. From the original punch cards and printouts to monitors, mice, and keyboards, all the way to the trackpad, voice recognition, and interfaces designed to make computers easier to use for people with disabilities, interfaces have progressed rapidly within the last few decades. But there’s still a long way to go, and there are many possible directions that future interface designs could take.

We’re already seeing some start to crop up, and it’s exciting to think about how they’ll change our lives. In this article are more than a dozen potential future user interfaces that we’ll be seeing over the next few years (and some further into the future).

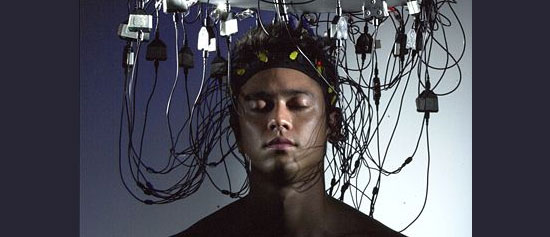

Brain-Computer Interface

What it is: In a brain-computer interface, a computer is controlled purely by thought (or, more accurately, brain waves). There are a few different approaches being pursued, including direct brain implants, full helmets, and headbands that capture and interpret brain waves.

Army Mind-Control Projects

According to an article in Time from September 2008, the U.S. Army is actively pursuing “thought helmets” that could someday lead to secure mind-to-mind communication between soldiers.

The goal, according to the article, is a system where entire military systems could be controlled by thought alone. While this kind of technology is still far off, the fact that the military has awarded a $4 million contract to a team of scientists from the University of California at Irvine, Carnegie Mellon University, and the University of Maryland means that we might be seeing prototypes of these systems within the next decade.

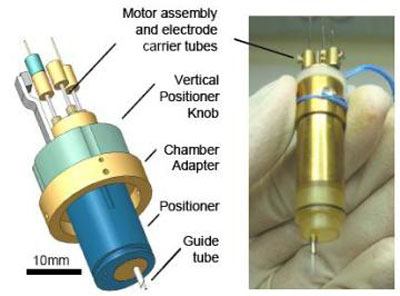

The Matrixesque Brain Interface: MEMS-Based Robotic Probe

Researchers at Caltech are working on a MEMS-based robotic probe that can implant electrodes into your brain to interface with particular neurons. While it sounds like something out of The Matrix, the idea is that it could allow for advanced control of prosthetic limbs or similar body control.

The software part of the device is complete, though the micro-mechanical part (the part that actually goes into your brain) is still under development.

OCZ’s Neural Impulse Actuator

The NIA is a headband and controller that incorporates an electromyogram, an electroencephalogram, and an electrooculogram to translate facial muscle movements, brain waves, and eye movements into input. The most interesting part of the NIA is that it can be set up to work with virtually any game; the controller simply translates input into keystrokes.
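The keystroke-translation idea can be sketched as a simple lookup from classified signal events to keys. This is only a minimal illustration, not OCZ’s actual software: the event names, the strength threshold, and the key bindings below are all hypothetical.

```python
# Hypothetical sketch: map classified biosignal events to keystrokes.
# Event names, the threshold, and the bindings are illustrative assumptions,
# not the NIA's real configuration.

KEY_BINDINGS = {
    "jaw_clench": "space",   # facial muscle activity
    "glance_left": "a",      # eye movement
    "glance_right": "d",     # eye movement
    "alpha_burst": "w",      # brain-wave activity
}

def translate(events, threshold=0.6):
    """Return the keys for known events whose strength meets the threshold."""
    keys = []
    for name, strength in events:
        key = KEY_BINDINGS.get(name)
        if key is not None and strength >= threshold:
            keys.append(key)
    return keys

sample = [("glance_left", 0.9), ("jaw_clench", 0.4),
          ("alpha_burst", 0.7), ("blink", 1.0)]
print(translate(sample))  # ['a', 'w']
```

Because the output is just a list of ordinary keys, any game that accepts keyboard input could in principle be driven this way, which is presumably why the approach works with so many titles.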

Biometric and Cybernetic Interfaces

What it is: In computing, cybernetics most often refers to robotic systems and the command and control of those systems. Biometrics, on the other hand, refers to biological markers that every human being (and every life form) has and that are generally unique to each individual.

These are most often used for security purposes, such as fingerprint or retina scanners. Here are a few current biometric and cybernetic interface projects.

Warfighter Physiological Status Monitoring

The Military Operational Medicine Research Program is developing sensors that can be embedded into clothing to monitor soldiers’ physiological well-being. These can be used not only to monitor real-time health, but also to input additional variables into predictive models the military uses to evaluate the likely success of its missions.

Fingerprint Scanners

Fingerprint and hand scanners have been seen in movies for ages as high-tech security devices.

And they’ve finally become readily available within the past few years. In most cases, fingerprint scanners are used to allow or deny access to certain users for a computer system, vehicle, or controlled-access area. Because fingerprints are unique, this is a nearly foolproof way of determining who is gaining access to something, as well as a way to track who accessed what, and when.

Digital Paper and Digital Glass

What it is: Digital paper is a flexible, reflective type of display that uses no backlighting and simulates real paper quite well.

In most cases, digital paper doesn’t require any power except when changing what it’s displaying, resulting in very long battery life in devices that use it. Digital glass, on the other hand, is a transparent display that otherwise resembles a standard LCD monitor.

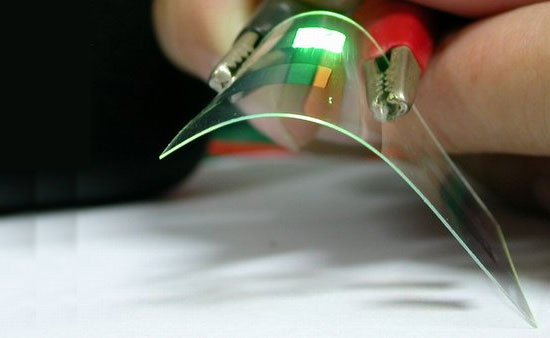

Transparent OLED Display

Samsung showcased a prototype of a new, transparent OLED display on a notebook at CES 2010. The display is unlikely to appear in notebooks in its finished form, but it might be used in MP3 players or advertising displays in the future according to the company.

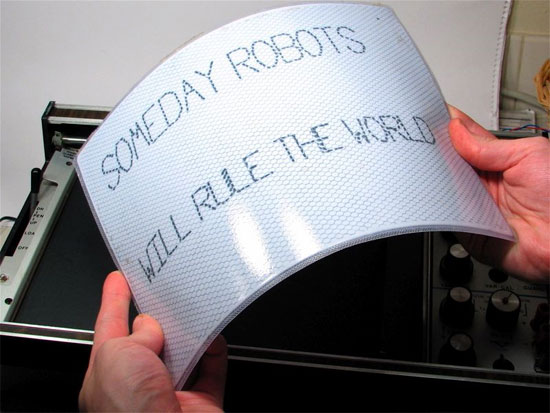

LG 19″ Flexible Display

Flexible e-paper displays might replace paper one day. Unlike its rigid counterparts, flexible e-paper can be nearly as flexible as real paper (or card stock, at least), and almost as thin. LG has created a 19″ e-paper display that’s flexible and made of metal foil so it will always return to its original shape.

Watch for this type of display to become popular for reading newspapers or other large-format content in the future.

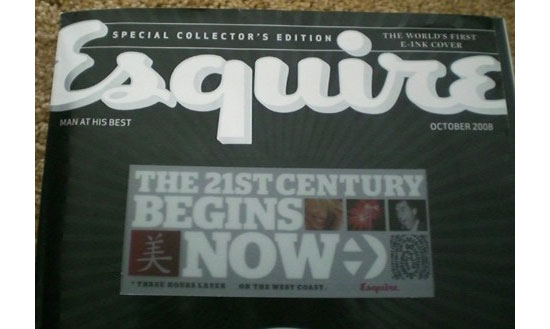

E-Ink

E-Ink is a proprietary electronic paper technology with interesting implications for the packaging and media industries. It has already seen some real-world use (such as on the Esquire cover from October 2008). While it’s currently only available in grayscale, it’s likely to be available in full color before too long.

E-Ink is famous for its integration into many popular eBook readers, including the Kindle, Barnes & Noble’s Nook, and the Sony Reader. And while in these devices it sits within a rigid display, there’s no reason it can’t be used in a flexible one.

Telepresence

What it is: Telepresence consists of remote control of a drone or robot. Telepresence systems are most commonly seen in the scientific and defense sectors, and vary considerably based on what they’re being used for.

In some cases, those controlling the device only get visual input, but in others (such as medical telepresence devices) a more complete simulation is created. Below are some of the best telepresence projects currently underway.

Telepresence Surgery

Minimally invasive surgery can now be conducted via telepresence, using a robot to perform the surgery on the actual patient, while a surgeon controls it remotely. In fact, this method of surgery can actually work better than using long, fulcrum instruments to perform the surgery in person.

The technology combines telerobotics, sensory devices, stereo imaging, and video and telecommunications to give surgeons the full sensory experience of traditional surgery. Surgeons are provided with feedback in real-time, including the pressure they would feel when making an incision in a hands-on surgery.

Universal Control System

The Universal Control System is a system developed by Raytheon (a defense contractor) for directing aerial military drones. The interface is not unlike a video game, with multiple monitors to give operators a 120° view of what the drone sees.

Raytheon looked at the existing technology drone pilots were using (standard computer systems with a nose-mounted camera and a keyboard) and realized that gamers were using better systems. So the company set about developing a drone operation system based on civilian business games (and even hired game developers). The finished system also incorporates augmented reality and other futuristic interface elements.

Space Exploration and Development

Telepresence could be used to allow humans to experience space environments from the safety of Earth.

This technology could allow people to remotely explore distant planets without having to leave our own planet, and for a lot less than an actual manned mission. The biggest hurdles to this technology at the moment are delays in communications over long distances, though there are already advances happening in those areas that may make it a non-issue within the next few years.

Augmented Reality

What it is: Augmented reality consists of overlaying data about the real world over real-time images of that world. In current applications, a camera (generally attached to either a computer or cell phone) captures real-time images that are then superimposed with information gathered based on your location.

There are a number of current augmented reality projects in the works. Here are some of the most interesting ones.

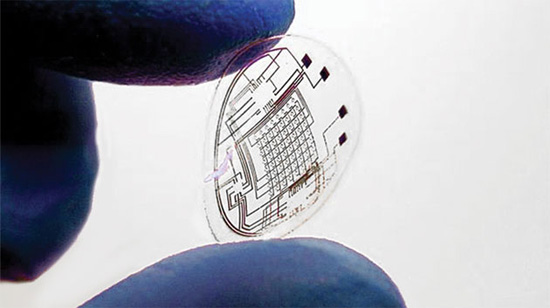

Augmented Reality in a Contact Lens

One of the more interesting current augmented reality projects is a display contained within a contact lens. The conduit between the eye and the brain is much faster than a high-speed internet connection, and the eye can perceive more than we realize, including millions of colors and tiny shifts in lighting.

Because of this, it makes sense that an interface that works directly with your eye would catch on. The current proofs of concept include contact lenses being developed at the University of Washington. They’re crafting lenses with a built-in LED, powered wirelessly by radio frequency (RF), along with other simple electronic circuits.

Eventually, these contact lenses will contain hundreds of tiny LEDs that can display images, words, and other information in front of the eye. It’s likely that these contact lenses will be the display for a separate control unit (such as a smartphone).

Wearable Retinal Display

The Universal Translator made communication between species possible in the Star Trek universe. And while a Universal Translator like that is likely a long way off, NEC is already working on a retinal display called the “Tele Scouter” that will translate foreign languages into subtitles for the wearer.

The device is mounted on eyeglass frames and includes both a display and a microphone. The sound is transmitted to a separate device that sends it to a central server for translation, and then the subtitles are sent back to the device and shown on the retinal display. The best part is that the text is displayed within the user’s peripheral vision, which means they can keep eye contact with the person they’re speaking with.

Heads-Up Display

There’s one type of augmented reality that’s been around for years, first seen in military applications and then eventually in the commercial airline and automotive industries.

Heads-up displays (HUDs) are used to display data on the windshield of a car or plane without requiring the operator to look away from their surroundings. In cars, HUDs are helpful at night for showing driving conditions on the windshield of the vehicle. This allows drivers to keep their attention on the road ahead.

In the future, HUDs will be used for synthetic vision systems. In other words, everything a user sees in their viewport would be constructed from information obtained from a database, rather than from an actual real-world view. This type of system is still a long way off, but it could change the way vehicles are designed and make for safer aircraft and automobiles, because the driver or operator wouldn’t need a direct line of sight to their surroundings.

Privacy Concerns with Augmented Reality

Of course, privacy specialists will have a field day with Augmented Reality applications.

After all, what happens when you can easily look at a person and gain access to their personal information via facial recognition? The technology to do that isn’t too far off. You’ll simply look at a person across a crowded restaurant and their name, Facebook and Twitter accounts, phone number, and any other available information will be at your fingertips.

While this could certainly come in handy (such as those times when you find yourself confronted with someone who seems to know you, but you have no clue who they are), it could also make it nearly effortless for just about anyone to access your information. In fact, technology like this is already starting to pop up.

Voice Control

What it is: We’ve seen voice control in various sci-fi movies and novels for years. As the name implies, this technology relies on voice commands to control a computer.

Voice control has been around in some form for a few years now, but its application is just recently being explored. Here are a few current projects.

BMW Voice Control System

Leave it to luxury automaker BMW to develop a new voice control system that allows drivers to control their navigation and entertainment systems. A single voice command lets drivers get directions to their destination or play a specific song.

While other automakers have tried similar voice recognition systems, this one appears to be the most advanced.

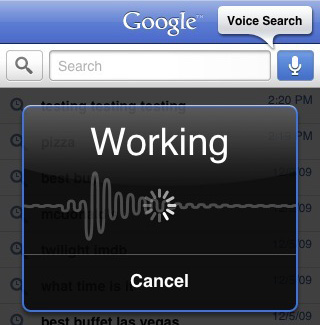

Google Voice Search

If you have a smartphone running Android, you’re probably already familiar with Google’s Voice Search feature. While it’s not foolproof, it’s definitely a great way to look something up without having to spend a minute typing in a complex search term. The best part about Google’s Voice Search is that it’s not just restricted to the Android platform.

It will also work with your BlackBerry, iPhone, Windows Mobile phone or Nokia S60. Voice search is handy if you’re trying to look something up in a hurry or while driving. The Android platform will also interface with navigation, which is handy if you’re behind the wheel.

Gesture Recognition

What it is: With gesture recognition, movements with the hands, feet, or other body parts are interpreted by a computer (often through the use of either a hand-held controller, a camera that captures movement, or some other input device like gloves) as commands. Gesture recognition’s popularity is due to the video gaming industry, though there are a number of other potential uses.

Acceleglove: Gloves that Recognize Sign Language

Researchers at the George Washington University have created a glove called the “Acceleglove” that will recognize American Sign Language gestures and translate them into text.

It works by using a series of accelerometers on each finger of the glove, along with other sensors on the shoulders and elbows, to send electrical signals to a microcontroller that finds the correct word associated with the movement. The unit determines signs based on starting hand positioning, intervening movements, and the ending gesture, eliminating candidate phrases at each step along the way. It takes milliseconds for the computer to output the correct word after the sign is completed.
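The step-by-step elimination described above can be illustrated with a tiny lookup. This is a rough sketch rather than the Acceleglove’s actual algorithm: the three-sign vocabulary and the pose and movement labels are made up for the example, and a real system would first have to classify raw sensor readings into labels like these.

```python
# Hypothetical sketch of staged elimination. Each sign is described by a
# (start pose, movement, end pose) triple, and candidates that do not match
# the observed stage are dropped. The vocabulary and labels are made up.

SIGNS = {
    "hello": ("flat_hand_at_forehead", "arc_outward", "flat_hand_forward"),
    "thanks": ("flat_hand_at_chin", "arc_outward", "flat_hand_forward"),
    "please": ("flat_hand_on_chest", "circle", "flat_hand_on_chest"),
}

def recognize(start, movement, end):
    """Narrow the candidate signs stage by stage; return a match or None."""
    candidates = set(SIGNS)
    for stage, observed in enumerate((start, movement, end)):
        candidates = {word for word in candidates
                      if SIGNS[word][stage] == observed}
        if not candidates:
            return None  # nothing in the vocabulary fits the observations
    # If several signs still match, pick one deterministically.
    return min(candidates)

print(recognize("flat_hand_at_chin", "arc_outward", "flat_hand_forward"))  # thanks
```

Filtering after each stage keeps the candidate set small, which fits the article’s point that the word is available almost as soon as the sign ends.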

Gesture-Based Control for TVs

Television, because of its simplified user interface, is a perfect candidate for control by gesture recognition.

And examples of gesture recognition control for TVs are already available. At the 2009 International Consumer Electronics Show there were a few examples of gesture control for TVs. Panasonic has developed a remote control that has touch screens where finger gestures control various things. But Hitachi has come out with a TV that uses a 3-D depth camera to recognize gestures on a much larger scale. It lets you use hand gestures to change the channel, control the volume, and even to turn the TV on and off.

Nintendo Wii

The Nintendo Wii’s controller system is probably the first widely adopted gaming system that uses gesture recognition for at least part of its control method.

Of course, the Wii’s gesture recognition system requires that you hold the special Wii Remote and Nunchuk in order to have your gestures recognized, but it’s still a pioneering system within the gaming industry. And in the future, it’s likely that other systems, not just for gaming but in the computer industry in general, will adopt similar control systems.

Xbox Project Natal

Project Natal takes the Wii’s gesture recognition a step further. No remote or controller is required; users simply interact with what’s on screen as they would in the real world.

In other words, to kick a ball, just perform a kick motion. It eliminates the need for controllers and makes gaming more immersive.

Head and Eye Tracking

What it is: Head and eye tracking technology interprets natural eye and head movements to control your technology.

Gran Turismo 5

Gran Turismo has long been heralded as one of the most realistic racing games out there. But with Gran Turismo 5, they’ve gone a step further.

The newest version of the game will include head tracking capabilities. The PlayStation Eye camera will track a player’s head and control the view within the cockpit of the car. This will make the overall experience much closer to what you actually experience while driving, where you can glance to one side or the other quickly without entirely losing sight of what’s in front of you.
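To give a feel for how a tracked head position might steer an in-game view, here is a minimal sketch. It is not how Gran Turismo 5 actually implements head tracking: the frame width, the maximum view angle, and the linear mapping are all assumptions chosen for illustration.

```python
# Hypothetical sketch: convert a tracked head position into a cockpit view
# angle. The frame width, maximum angle, and linear mapping are assumptions.

def view_yaw(head_x, frame_width=640, max_yaw_deg=60.0):
    """Map the head's horizontal pixel position to a camera yaw in degrees.

    The centre of the camera frame gives 0 degrees; the left and right
    edges give -max_yaw_deg and +max_yaw_deg.
    """
    half = frame_width / 2
    offset = (head_x - half) / half        # -1.0 at left edge, 1.0 at right
    offset = max(-1.0, min(1.0, offset))   # clamp if the tracker overshoots
    return offset * max_yaw_deg

print(view_yaw(320))  # 0.0
print(view_yaw(480))  # 30.0
```

A small head movement produces a proportional glance to the side, so the road ahead never fully leaves the screen.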

Pseudo-3D with a Generic Webcam

Chris Harrison has come out with a head tracking system that works with a standard webcam.

It’s available for Mac OS X (not including 10.6) and can be used with any number of 3D interfaces. The most interesting thing is that this kind of head tracking can easily be made to work using existing hardware.

Artificial Intelligence

What it is: Artificial Intelligence (AI) consists of creating inorganic systems capable of learning from human input. While we’ve already created systems that are decent at mimicking learning behavior, they’re still limited by their code.

Eventually, computers will be able to learn and grow beyond their programming. It’ll only be a matter of time before we see The Skynet Funding Bill passing (it’s already 13 years behind). Below are some of the more interesting AI projects currently being considered and undertaken.

Cyber Security Knowledge Transfer Network

In the UK, police are looking into how AI can be used for counter-terrorism surveillance, data mining, masking online identities, and preventing internet fraud.

They’re also looking at how intelligent programs could capture useful information and preserve images of hard drives over the web. Digital forensics could become much more efficient with the assistance of artificial intelligence, so expect to see a lot more projects in the coming years that incorporate AI with law enforcement.

AI for Adaptive Gaming

Artificial intelligence will create more realistic and engaging gameplay. Rather than relying solely on pre-programmed interactions, AI can allow games to adapt to their players mid-game.

While there are some technologies being employed that simulate artificial intelligence in video games, true AI hasn’t yet been achieved. Newer technologies, like dynamic scripting, could bring game AI to a new level, leading to more realistic gameplay.

AI for Mission Control

NASA and other world space agencies are actively looking into artificial intelligence for controlling probes that might explore star systems outside our own. Because of delays in radio transmissions, the further away a probe gets, the longer it takes to communicate with it and control it.

But AI may eventually make the need for direct control nearly disappear. These probes would be able to react intelligently to new stimuli, and could carry out more abstract orders rather than having to have every minute movement preprogrammed or transmitted on the go.
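The size of the delay is easy to estimate, since radio signals travel at the speed of light: the one-way delay is simply the distance divided by that speed. The short calculation below is a sketch with example distances picked for illustration (they are not taken from any specific mission).

```python
# One-way signal delay = distance / speed of light.
SPEED_OF_LIGHT_KM_S = 299_792.458   # speed of light in km/s
AU_KM = 149_597_870.7               # one astronomical unit in km

def one_way_delay_seconds(distance_km):
    """Return the time in seconds for a radio signal to cover the distance."""
    return distance_km / SPEED_OF_LIGHT_KM_S

# Example distances are assumptions chosen for illustration.
for label, distance_au in [("1 AU (Earth-Sun distance)", 1.0),
                           ("100 AU", 100.0)]:
    delay = one_way_delay_seconds(distance_au * AU_KM)
    print(f"{label}: {delay / 60:.1f} minutes one way")
```

At 1 AU the signal takes a little over eight minutes each way, and a round trip doubles that, which is why a probe far from Earth cannot be steered in real time.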

Virtual Assistants

The need for an assistant to handle the mundane tasks of everyday life is growing for many people. However, current options are limited, especially for the majority of us who can’t afford a personal assistant.

But soon, we’ll have virtual assistants that can make a reservation for us, find a gift for a grandmother’s 75th birthday, or do all the research for our next project. While the balance between true AI and simply very clever programming will vary, there are definite potential applications for a true AI system in all this.

Multi-Touch

What it is: Multi-touch is similar to gesture recognition, but requires the use of a touch screen. A traditional touch screen could accept input from only one point on the screen at a time.

Multi-touch, on the other hand, can accept input from multiple points simultaneously. There are already a number of products that include multi-touch, though the technology still has a lot of untapped potential.
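A pinch-to-zoom gesture shows why tracking two points at once matters: the zoom factor comes from how the distance between the fingers changes. The function below is a minimal sketch with a made-up name and plain coordinate tuples as input; real touch frameworks deliver touch points through their own event objects.

```python
import math

# Hypothetical sketch: derive a zoom factor from two simultaneous touches.
def pinch_scale(start_touches, end_touches):
    """Return the zoom factor implied by two fingers moving apart or together.

    Each argument is a pair of (x, y) touch points.
    """
    (ax, ay), (bx, by) = start_touches
    (cx, cy), (dx, dy) = end_touches
    start_dist = math.hypot(bx - ax, by - ay)
    end_dist = math.hypot(dx - cx, dy - cy)
    if start_dist == 0:
        raise ValueError("starting touch points must be distinct")
    return end_dist / start_dist

# Fingers start 100 px apart and end 200 px apart: zoom in by 2x.
print(pinch_scale(((100, 100), (200, 100)), ((50, 100), (250, 100))))  # 2.0
```

A single-touch screen only ever reports one of these points, so it has no way to measure the spread between fingers.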

Microsoft Surface

Microsoft’s Surface technology is a large-scale multi-touch system that’s particularly suited to being built into things like tables or retail displays. Surface is in use in a variety of places, including Disney’s Tomorrowland resort and during MSNBC’s election coverage.

Because of Surface’s large scale and likely uses, it accepts input not just from multiple fingers at once, but from multiple users at once. This makes it particularly suited to public spaces. In addition to multi-touch, Surface also has object recognition capabilities, which allow users to place physical objects on the screen to trigger different digital responses.

Apple Products

Apple has been a leader in implementing multi-touch technology for a few years now.

The iPhone was the first mainstream consumer product to use multi-touch, and the technology has since appeared on the iPod Touch, the MacBook trackpad, the Magic Mouse and, soon, the iPad. Multi-touch has become a key part of the Mac OS X user experience and of the iPhone OS. Everything from scrolling to zooming in and out to custom gestures can be carried out using the multi-touch interface.

Mobile Phones

In addition to the iPhone, a number of other mobile devices have multi-touch capabilities.

The Palm Pre and Pixi, the Motorola Droid (though multi-touch is disabled in the U.S.), and the HTC Hero and HD2 all have multi-touch capabilities. For the most part, these phones use multi-touch for simple tasks like zooming in and out when browsing the web. Usability is greatly improved in most cases because of the inclusion of multi-touch, especially when it comes to manipulating on-screen graphics and images.

What user interface are you most excited about?

What user interface technologies and projects are you eager to see the most?

Share your thoughts in the comments.

Related Content

-

President of WebFX. Bill has over 25 years of experience in the Internet marketing industry specializing in SEO, UX, information architecture, marketing automation and more. William’s background in scientific computing and education from Shippensburg and MIT provided the foundation for MarketingCloudFX and other key research and development projects at WebFX.

President of WebFX. Bill has over 25 years of experience in the Internet marketing industry specializing in SEO, UX, information architecture, marketing automation and more. William’s background in scientific computing and education from Shippensburg and MIT provided the foundation for MarketingCloudFX and other key research and development projects at WebFX. -

WebFX is a full-service marketing agency with 1,100+ client reviews and a 4.9-star rating on Clutch! Find out how our expert team and revenue-accelerating tech can drive results for you! Learn more

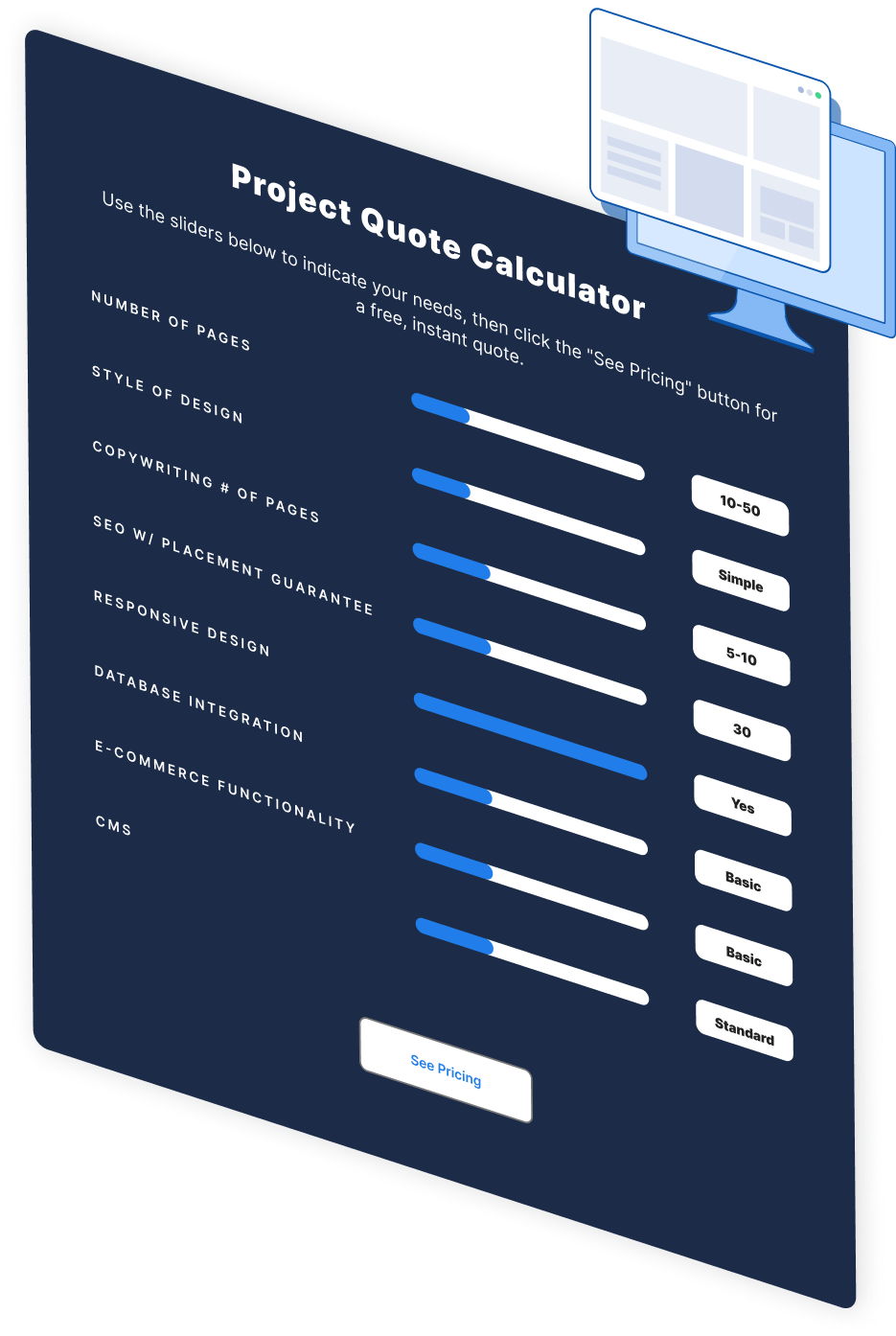

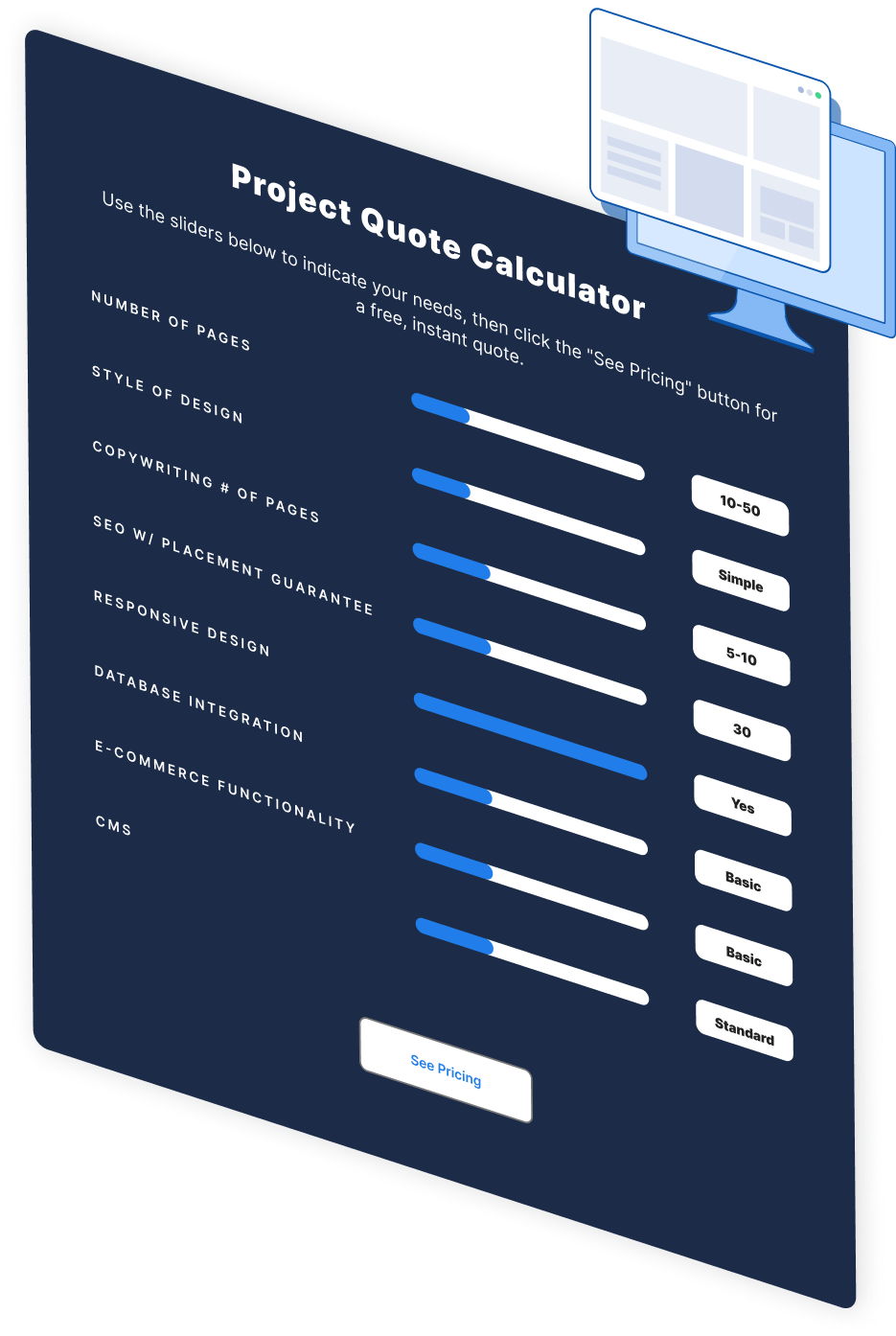

Make estimating web design costs easy

Website design costs can be tricky to nail down. Get an instant estimate for a custom web design with our free website design cost calculator!

Try Our Free Web Design Cost Calculator

Web Design Calculator

Use our free tool to get a free, instant quote in under 60 seconds.

View Web Design CalculatorMake estimating web design costs easy

Website design costs can be tricky to nail down. Get an instant estimate for a custom web design with our free website design cost calculator!

Try Our Free Web Design Cost Calculator