10 Ways to Improve Your Web Page Performance

10 min. read

William Craig CEO & Co-Founder

President of WebFX. Bill has over 25 years of experience in the Internet marketing industry specializing in SEO, UX, information architecture, marketing automation and more. William’s background in scientific computing and education from Shippensburg and MIT provided the foundation for MarketingCloudFX and other key research and development projects at WebFX.

There are a million and one ways to boost your website’s performance. The methods vary and some are more involved than others. The three main areas that you can work on are: hardware (your web server), server-side scripting optimization (PHP, Python, Java), and front-end performance (the meat of the web page).

This article primarily focuses on front-end performance since it’s the easiest to work on and provides you the most bang for your buck.

Why focus on front-end performance?

The front-end (i.e. your HTML, CSS, JavaScript, and images) is the most accessible part of your website. If you’re on a shared web hosting plan, you might not have root (or root-like) access to the server and therefore can’t tweak and adjust server settings.

And even if you do have the right permissions, web server and database engineering require specialized knowledge before they give you any immediate benefits. Front-end optimization is also cheap: most of the techniques discussed here cost nothing but your time.

Not only is it inexpensive, but it’s the best use of your time because front-end performance is responsible for a very large part of a website’s response time. With this in mind, here are a few simple ways to improve the speed of your website.

1. Profile your web pages to find the culprits.

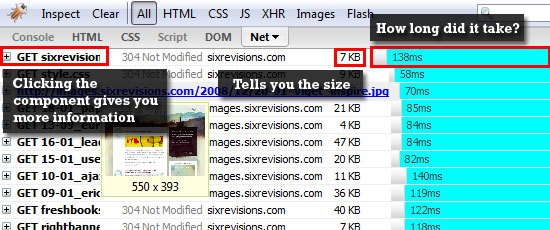

It’s helpful to profile your web page to find components that you don’t need or components that can be optimized. Profiling a web page usually involves a tool such as Firebug to determine what components (i.e. images, CSS files, HTML documents, and JavaScript files) are being requested by the user, how long the component takes to load, and how big it is.

A general rule of thumb is that you should keep your page components as small as possible (under 25KB is a good target). Firebug’s Net tab (shown above) can help you hunt down huge files that bog down your website. In the above example, you can see that it gives you a breakdown of all the components required to render a web page, including what each one is, where it is, how big it is, and how long it took to load.
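To make the 25KB rule of thumb concrete, here is a minimal Python sketch that flags oversized components. The URLs, sizes, and load times are made-up sample data standing in for what a profiler's network panel would report; nothing here is produced by Firebug itself.

```python
# Hypothetical profiling data: (URL, size in bytes, load time in ms).
# In practice these numbers would come from your profiler's network panel.
COMPONENTS = [
    ("/index.html", 12_400, 180),
    ("/css/main.css", 31_200, 95),
    ("/js/app.js", 88_000, 240),
    ("/img/logo.png", 9_800, 60),
]

SIZE_TARGET_BYTES = 25 * 1024  # the 25KB rule of thumb from the text


def oversized(components, limit=SIZE_TARGET_BYTES):
    """Return the components larger than `limit`, biggest first."""
    heavy = [c for c in components if c[1] > limit]
    return sorted(heavy, key=lambda c: c[1], reverse=True)


for url, size, ms in oversized(COMPONENTS):
    print(f"{url}: {size / 1024:.1f} KB, {ms} ms")
```

Sorting by size puts the biggest offenders first, which is usually where the easiest wins are.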

There are many tools on the web to help you profile your web page – check out this guide for a few more tools that you can use.

2. Save images in the right format to reduce their file size.

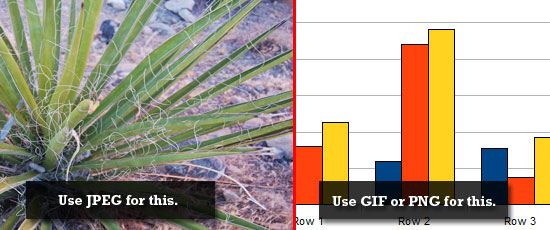

If you have a lot of images, it’s essential to learn about the optimal format for each image. There are three common web image file formats: JPEG, GIF, and PNG. In general, you should use JPEG for realistic photos with smooth gradients and color tones.

You should use GIF or PNG for images that have solid colors (such as charts and logos). GIF and PNG are similar, but PNG typically produces a smaller file. Read Coding Horror’s weigh-in on using PNGs over GIFs.

3. Minify your CSS and JavaScript documents to save a few bytes.

Minification is the process of removing unneeded characters (such as tabs, spaces, and source code comments) from the source code to reduce its file size. For example, this chunk of CSS:

.some-class { color: #ffffff; line-height: 20px; font-size: 9px; }

can be converted to:

.some-class{color:#fff;line-height:20px;font-size:9px;}

…and it’ll work just fine. And don’t worry: you won’t have to reformat your code manually.

There’s a plethora of free tools available at your disposal for minifying your CSS and JavaScript files. For CSS, you can find a bunch of easy-to-use tools from this CSS optimization tools list. For JavaScript, some popular minification options are JSMIN, YUI Compressor, and JavaScript Code Improver.

A good minifying application gives you the ability to reverse the minification for when you’re in development. Alternatively, you can use an in-browser tool like Firebug to see the formatted version of your code.
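To show roughly what a minifier does under the hood, here is a deliberately naive Python sketch. The function name `minify_css` is my own, and the regular expressions are a simplification: they don't protect string literals or `url(...)` values, and they would break a selector that relies on a space before a colon. Treat it as an illustration of the idea rather than a substitute for the tools mentioned above.

```python
import re


def minify_css(css: str) -> str:
    """Naive CSS minifier: a sketch, not a replacement for a real tool."""
    # Strip /* ... */ comments, including multi-line ones.
    css = re.sub(r"/\*.*?\*/", "", css, flags=re.DOTALL)
    # Collapse runs of whitespace (tabs, newlines, spaces) into one space.
    css = re.sub(r"\s+", " ", css)
    # Drop the spaces around punctuation that doesn't need them.
    css = re.sub(r"\s*([{};:,])\s*", r"\1", css)
    # Shorten #aabbcc to #abc when each pair repeats the same hex digit.
    css = re.sub(
        r"#([0-9a-fA-F])\1([0-9a-fA-F])\2([0-9a-fA-F])\3\b",
        r"#\1\2\3",
        css,
    )
    return css.strip()


source = ".some-class { color: #ffffff; line-height: 20px; font-size: 9px; }"
print(minify_css(source))
```

Run against the example rule from this section, the sketch produces the same compact form shown earlier.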

4. Combine CSS and JavaScript files to reduce HTTP requests

For every component that’s needed to render a web page, an HTTP request is created to the server. So, if you have five CSS files for a web page, you would need at least five separate HTTP GET requests for that particular web page. By combining files, you reduce the HTTP request overhead required to generate a web page.

Check out Niels Leenheer’s article on how you can combine CSS and JS files using PHP (which can be adapted to other languages). SitePoint discusses a similar method of bundling your CSS and JavaScript; they were able to shave 1.6 seconds off the response time, a 76% reduction from the original. Otherwise, you can combine your CSS and JavaScript files using good old copy-and-pasting (works like a charm).
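If you'd rather script the copy-and-paste step, a build-time concatenation can be sketched in a few lines of Python. This is not the PHP approach from the articles above; the function name `combine_files` and the file names in the usage comment are hypothetical.

```python
from pathlib import Path


def combine_files(sources, destination):
    """Concatenate `sources` in order into `destination` and return its path."""
    parts = [Path(src).read_text(encoding="utf-8") for src in sources]
    # A newline between files keeps the last line of one file from
    # running into the first line of the next.
    combined = "\n".join(parts)
    target = Path(destination)
    target.write_text(combined, encoding="utf-8")
    return target


# Example usage (hypothetical file names):
# combine_files(["reset.css", "layout.css", "theme.css"], "site.css")
```

Order matters here: later CSS rules override earlier ones and scripts may depend on each other, so list the files in the same order your page currently loads them.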

5. Use CSS sprites to reduce HTTP requests

A CSS Sprite is a combination of smaller images into one big image. To display the correct image, you adjust the background-position CSS attribute. Combining multiple images in this way reduces HTTP requests.

For example, on Digg (shown above), you can see individual icons for user interaction. To reduce server requests, Digg combined several icons in one big image and then used CSS to position them appropriately. You can do this manually, but there’s a web-based tool called CSS Sprite Generator that gives you the option of uploading images to be combined into one CSS sprite, and then outputs the CSS code (the background-position attributes) to render the images.
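As a rough idea of what such a generator outputs, here is a small Python sketch that builds the background-position rules for icons stacked vertically in a single sprite. The class naming scheme, the `icons.png` file name, and the 16px icon height are all assumptions for illustration, not what Digg or CSS Sprite Generator actually use.

```python
def sprite_rules(icon_names, icon_height, sprite_url="icons.png"):
    """Build CSS rules for same-sized icons stacked vertically in one image."""
    rules = []
    for index, name in enumerate(icon_names):
        # Shift the sprite upward so that only this icon shows through.
        offset = -index * icon_height
        position = f"0 {offset}px" if offset else "0 0"
        rules.append(
            f".icon-{name}{{background:url({sprite_url}) no-repeat;"
            f"background-position:{position};}}"
        )
    return rules


# Hypothetical icon names, each 16px tall in the sprite.
for rule in sprite_rules(["digg", "bury", "share"], 16):
    print(rule)
```

Each element also needs a fixed width and height matching the icon so that neighboring icons in the sprite stay hidden.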

6. Use server-side compression to reduce file sizes

This can be tricky if you’re on a shared web host that doesn’t already offer server-side compression, but to fully optimize the serving of page components, they should be compressed. Compressing page objects is similar to zipping up a large file that you send through email: you (the web server) zip up a large family picture (the page component) and email it to your friend (the browser), who in turn unpacks your ZIP file to see the picture. Popular compression methods are Deflate and gzip.

Compressing images helps significantly with page load time. Slow load times can really hurt a business’s website, especially an e-commerce site with a lot of images, such as an auto parts retailer. If you run your own dedicated server or have a VPS, you’re in luck: if compression isn’t already enabled, installing an application to handle it is a cinch. Check out this guide on how to install mod_gzip on Apache.
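To get a feel for the zip-and-unzip round trip described above, here is a minimal sketch using Python's standard `gzip` module. The repeated list markup is made-up sample content, chosen because repetitive text compresses well; real pages will see different ratios.

```python
import gzip

# Hypothetical page component: repetitive markup compresses well.
html = ('<li class="item"><a href="#">Link</a></li>\n' * 200).encode("utf-8")

compressed = gzip.compress(html)         # what the web server sends
restored = gzip.decompress(compressed)   # what the browser does on receipt

print(f"original:   {len(html)} bytes")
print(f"compressed: {len(compressed)} bytes")
print(f"round trip intact: {restored == html}")
```

On a real site the web server does this for you on the fly, so you only need to enable it rather than write any code.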

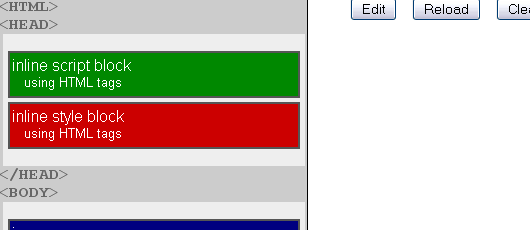

7. Avoid inline CSS and JavaScript

By default, external CSS and JavaScript files are cached by the user’s browser. When a user navigates away from the landing page, they will already have your stylesheets and JavaScript files, which saves them from having to download styles and scripts again. If you put a lot of inline CSS and JavaScript in your HTML document, you won’t be taking advantage of the web browser’s caching features.

8. Offload site assets and features

Offloading some of your site assets and features to third-party web services greatly reduces the work of your web server. The principle is that you share the burden of serving page components with another server. You can use Feedburner to handle your RSS feeds, Flickr to serve your images (be aware of the implications of offloading your images though), and the Google AJAX Libraries API to serve popular JavaScript frameworks/libraries like MooTools, jQuery, and Dojo.

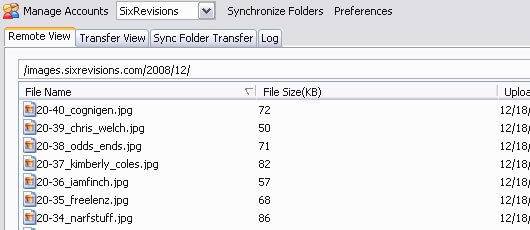

For example, on Six Revisions I use Amazon’s Simple Storage Service (Amazon S3 for short), to handle the images you see on this page, as well as Feedburner to handle RSS feeds. This allows my own server to handle just the serving of HTML, CSS, and CSS image backgrounds. Not only are these solutions cost-effective, but they drastically reduce the response times of web pages.

9. Use Cuzillion to plan out an optimal web page structure

Cuzillion is a web-based application created by Steve Souders (front-end engineer for Google after leaving Yahoo! as Chief of Performance) that helps you experiment with different configurations of a web page’s structure in order to see what the optimal structure is. If you already have a web page design, you can use Cuzillion to simulate your web page’s structure and then tweak it to see if you can improve performance by moving things around.

View InsideRIA’s video interview of Steve Souders discussing how Cuzillion works, and read the Help guide to get started quickly.

10. Monitor web server performance and create benchmarks regularly.

The web server is the brains of the operation – it’s responsible for getting/sending HTTP requests/responses to the right people and serves all of your web page components. If your web server isn’t performing well, you won’t get the maximum benefit of your optimization efforts. It’s essential that you are constantly checking your web server for performance issues.

If you have root-like access and can install stuff on the server, check out ab, an Apache web server benchmarking tool, or httperf from HP. If you don’t have access to your web server (or have no clue what I’m talking about), you’ll want to use a tool that runs on your own machine, like Fiddler or HTTPWatch, to analyze and monitor HTTP traffic. Both will point out trouble spots for you to take a look at.

Benchmarking before and after making major changes will also give you some insight on the effects of your changes. If your web server can’t handle the traffic your website generates, it’s time for an upgrade or server migration.
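A before-and-after comparison can be as simple as the sketch below. The response times are invented sample numbers, not measurements from any real server; in practice you would paste in the timings reported by whichever benchmarking tool you use.

```python
from statistics import mean, median


def summarize(samples_ms):
    """Summarize a list of response times given in milliseconds."""
    return {
        "mean": mean(samples_ms),
        "median": median(samples_ms),
        "max": max(samples_ms),
    }


def percent_change(before, after):
    """Relative change from `before` to `after`; negative means faster."""
    return (after - before) / before * 100


# Hypothetical response times (ms) from runs before and after a change.
before = summarize([420, 450, 430, 610, 440])
after = summarize([310, 300, 330, 320, 340])

change = percent_change(before["median"], after["median"])
print(f"median: {before['median']} ms -> {after['median']} ms ({change:.1f}%)")
```

I compare medians rather than means here because a single slow outlier (like the 610 ms sample) can skew the mean.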

Further reading

- Read Aaron Hopkins’s Optimizing Page Load Time study.

- Check out Max Kiesler’s analysis of the return on investment gained by improving page loading times.

- Understand the importance of front-end performance by reading What the 80/20 Rule Tells Us about Reducing HTTP Requests on the Yahoo! User Interface blog.

- Cal Henderson’s piece on “Serving JavaScript Fast” shows you ways to optimize JavaScript files.

- artweb design shares a method and plugin for bundling CSS and JS in Rails.

Got more?

Like I said, there are a million and one ways to improve web page performance through on-page optimization. If you’ve got a bit more input on the points above or have additional tips you’d like to discuss, please share them with us in the comments.

Related content

-

President of WebFX. Bill has over 25 years of experience in the Internet marketing industry specializing in SEO, UX, information architecture, marketing automation and more. William’s background in scientific computing and education from Shippensburg and MIT provided the foundation for MarketingCloudFX and other key research and development projects at WebFX.

President of WebFX. Bill has over 25 years of experience in the Internet marketing industry specializing in SEO, UX, information architecture, marketing automation and more. William’s background in scientific computing and education from Shippensburg and MIT provided the foundation for MarketingCloudFX and other key research and development projects at WebFX. -

WebFX is a full-service marketing agency with 1,100+ client reviews and a 4.9-star rating on Clutch! Find out how our expert team and revenue-accelerating tech can drive results for you! Learn more

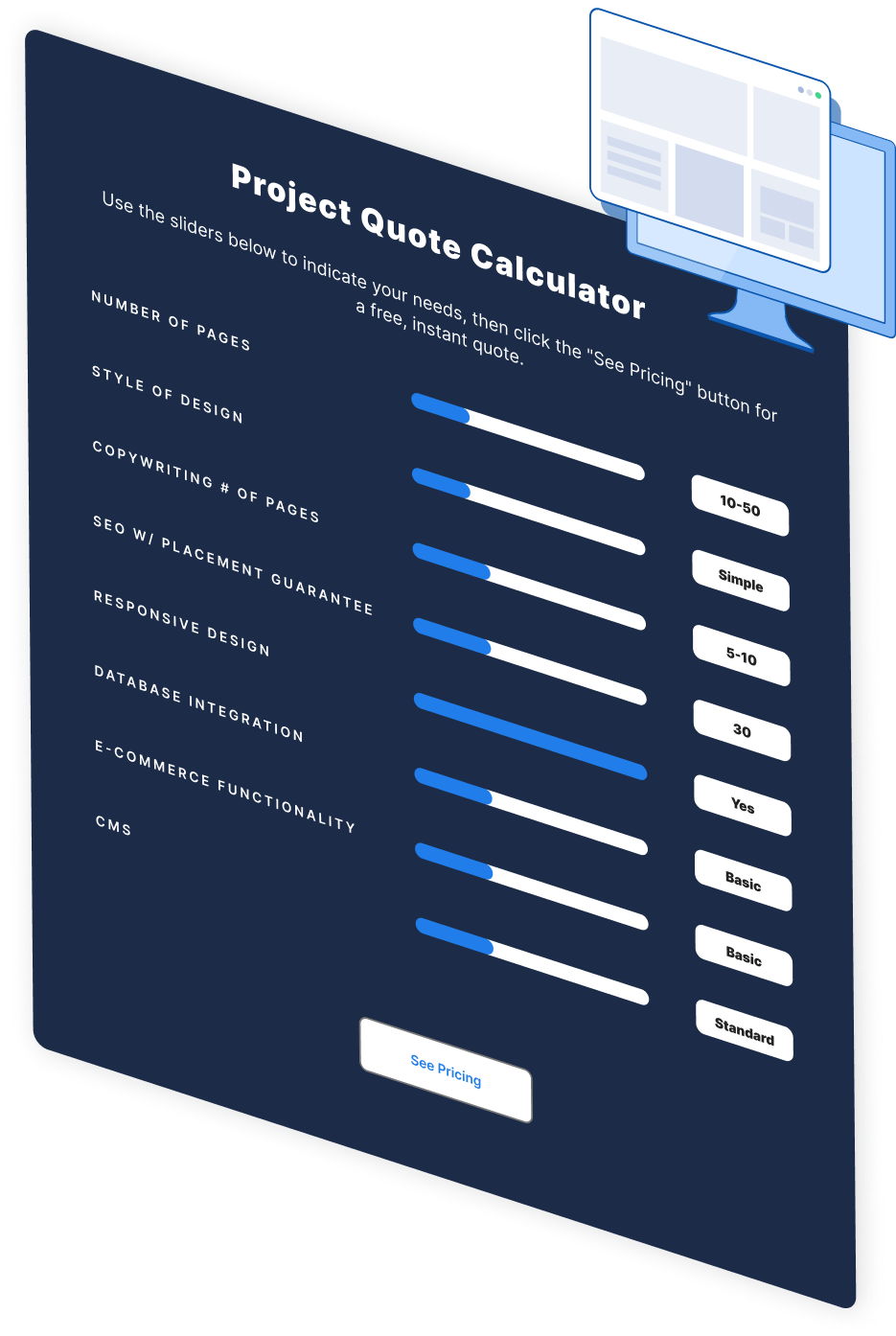

Make estimating web design costs easy

Website design costs can be tricky to nail down. Get an instant estimate for a custom web design with our free website design cost calculator!

Try Our Free Web Design Cost Calculator

Web Design Calculator

Use our free tool to get a free, instant quote in under 60 seconds.

View Web Design CalculatorMake estimating web design costs easy

Website design costs can be tricky to nail down. Get an instant estimate for a custom web design with our free website design cost calculator!

Try Our Free Web Design Cost Calculator